Everything You Should Understand About Voice AI – Part 2 of 3

We have spent a lot of time talking about flow, efficiency, and impressive demos. Voices that sound human. Conversations that seem natural in controlled tests. But behind the velvety voices lies a problem that is rarely discussed openly, and one that should keep every executive, security officer, and board member awake at night.

The question isn't whether the AI understands what the customer is saying. The question is: Who else has access to the voice?

The voice is not just data. It is biometric.

We often treat speech as if it were text in an email or a field in a CRM system. This is a fundamental misjudgment.

A voice is biometric. It is a unique, personal identifier. It reveals identity, age, health status, emotions, and in many cases also cultural and geographical affiliation. In the wrong hands, the voice is raw material for identity theft, social manipulation, and deepfake fraud.

Nevertheless, we see companies that would never send their customer register unencrypted across borders letting real-time audio of customer calls stream directly into global cloud services, often without full visibility into where the data is actually processed, stored, or reused.

Architecture is security

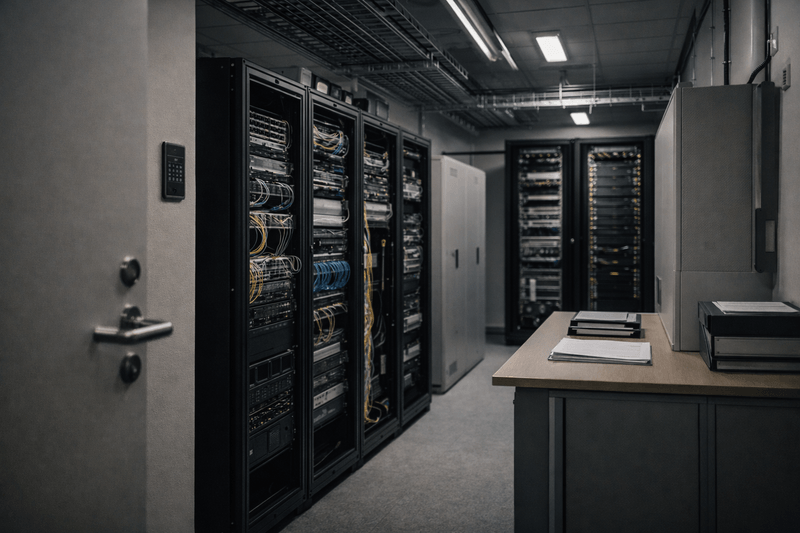

The problem with many Voice AI solutions today is not the intention, but the architecture. They are built for functionality and speed of development – not for ownership, control, and long-term security.

In a typical cloud-based Voice AI architecture, the same pattern repeats, largely regardless of provider:

First, the conversation is lifted out of the closed telecommunications network. Then, the audio is re-encoded and sent over the open internet. Next, it is processed in an external cloud, often in a different jurisdiction. Finally, both the audio and its transcriptions may be retained as training data or log material.

Every step is a potential point of failure. Every hop is a loss of control.

This is not a question of whether the provider will do something wrong. It is a question of who can do something wrong when the architecture itself requires biometric data to leave your control.

The legal blind spot

For the public sector, banking, insurance, and healthcare, this is not a theoretical discussion. It is a real legal challenge.

When biometric data crosses national borders, gray areas immediately arise around GDPR, access rights, deletion, emergency preparedness, and accountability. Many are reassured that the provider offers a "secure portal" or "enterprise-grade security". But if the architecture itself requires the voice to take a detour through global cloud environments to be understood, the damage is already done.

Security cannot be bolted on afterwards. It must be built in.

Infrastructure beats function

Traditional telephony is not glamorous. It is not designed for demos. But it is built on principles of control, closed signal paths, and clear ownership of the conversation.

It's easy to underestimate the value of this in an age when everything can be connected to an API. But the truth is simple:

If you do not control the infrastructure that the voice goes over, you do not control the security either.

Voice AI that operates outside the communications network is a vulnerability. Voice AI embedded within the core of the infrastructure, where processing happens locally and under your own jurisdiction, is a completely different matter.

Four questions you should ask before the next demo

Before you are impressed by the next Voice AI presentation, you should be able to answer a few basic questions clearly:

Where is the audio stream actually terminated and processed: in Norway, or on a server in another part of the world? Which legislation actually protects the biometric data, both in transit and at rest? Is voice data reused to train models, and if so, under what conditions, with what consent, and who owns the result? And who has real control over the conversation's lifecycle if something goes wrong?

Voice AI is no longer an experiment. It is the company's new front line. It is the voice customers hear before they ever reach a human.

Delegating the voice without understanding the architecture behind it is not innovation. It is an abdication of responsibility.

Part 3 looks ahead: what actually becomes possible when intelligence is built on top of communication infrastructure, and why this is not just about technology but about who owns the customer dialogue of the future.